United Kingdom - Pro-Innovation approach to AI regulation

This white paper by the UK government sets out the UK’s pro-innovation approach to AI regulation. The government does not intend to create new legislation for AI systems and their risks, but to establish cross-cutting principles for regulators to follow, as well as central functions that should enable the UK to balance promoting innovation with effective, proportionate responses to the risks of AI systems.

What: legislative/policy proposal

Impact score: 2

For who: policy makers, regulators, companies developing and using AI systems, persons affected by AI systems

URL: https://www.gov.uk/government/publications/ai-regulation-a-pro-innovation-approach

Key takeaways for Flanders:

- Importers and distributors of AI systems from the UK in the EU/Flanders should ensure that those systems also comply with (upcoming) EU AI regulation, since similar obligations will not be imposed by the UK.

- EU policy makers should consider to what extent the UK pro-innovation approach will convince AI developers to develop systems in the UK instead of in the EU, and whether they should therefore incentivise AI developers to continue to work in the EU.

- Policy makers in Belgium, as well as at the EU level, may take inspiration from the UK’s approach to identify (and possibly codify) principles that can be used to resolve any gaps or ambiguities in the enforcement or interpretation of upcoming legal frameworks for AI systems (e.g. to keep up with new technological developments).

Summary

Objectives, content and key elements

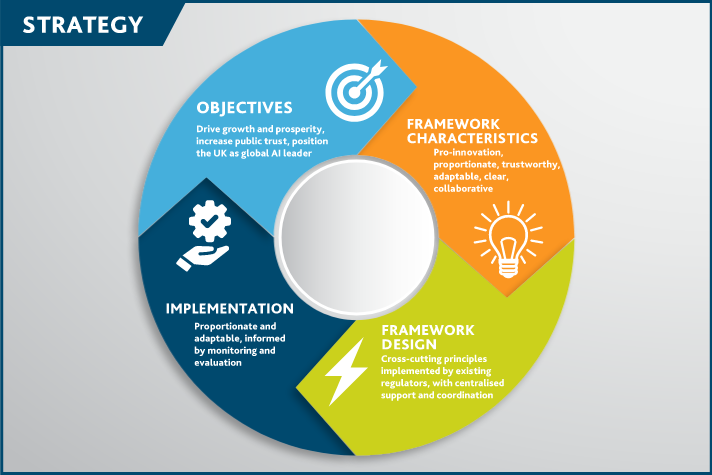

With its pro-innovation approach to AI regulation, the UK government aims to address the risks posed by AI systems and increase public trust in such systems. At the same time, it aims to support innovation by making its regulation proportionate and reducing legal uncertainty. The UK will therefore not initially adopt any new legislation under the framework. Instead, it will rely on its existing laws and regulators, with their existing standards, guidance and tools. These existing measures will be supported by five new principles that cut across the existing patchwork of legal regimes. Existing regulators will, initially on a non-statutory basis, implement these principles in practice and in the specific contexts where AI systems are used. The government’s approach is iterative and will be evaluated and further developed over time. It therefore does not rule out introducing new legislation in the future (such as a legal duty for regulators to make use of the principles), but it will not currently take any action in that regard. In summary, the proposed regulatory framework is a principles-based framework for regulators to apply to AI within their competences. The essential characteristics of the framework are that it will be pro-innovation, proportionate, trustworthy, adaptable, clear and collaborative.

Four key elements make up the UK pro-innovation approach:

- A definition of AI to support regulators’ coordination;

- The use of a context-specific approach to AI regulation;

- The aforementioned five cross-sectoral principles for AI to guide regulators and AI development; and

- New central functions in government to support the approach and ensure coherence.

Furthermore, the white paper also discusses the UK’s approach to foundation models, a new regulatory sandbox, tools that will be used to support AI implementation (AI assurance techniques and technical standards) and a position on international engagement.

1. AI definition in the new framework

The UK’s new framework defines AI by two characteristics: “adaptivity” (it is difficult for humans to discern the workings of AI systems and their forms of inference) and “autonomy” (AI systems can make decisions without the intent or ongoing control of a human). Because of these characteristics, the outputs of a system are difficult to explain, predict or control, and responsibility in relation to the system is difficult to allocate. With this definition, the UK government also hopes to bring unanticipated new technologies that are autonomous and adaptive within the scope of its approach. Aside from these two characteristics, no strict legal definition of AI is adopted. The approach expects that regulators will adopt domain-specific definitions of AI based on these two characteristics, and the government is prepared to coordinate such definitions to ensure that they align. The government also mentions that it retains the ability to define AI later if necessary for the pro-innovation framework.

2. A context-specific approach

The framework will not assign rules or risk levels to sectors or technologies but will instead focus on regulating the outcomes of AI applications in their context. In this context-specific approach, regulators will be expected, including in their enforcement actions, to weigh the risks of using AI against the costs of missing opportunities by not using AI. AI risk assessments should therefore also account for the failure to employ AI.

3. Principles-based approach

Five cross-sectoral principles support the approach to enable responsible AI design, development and use. Regulators must apply them proportionately when they implement the framework and issue guidance, although they have ample discretion in this respect. The principles are:

- Safety, security and robustness – Systems should function in a robust, secure and safe way throughout their life cycle with continual risk assessment and management. This includes embedding resilience to security threats into the system. Regulators may introduce measures to ensure technical security and reliability. Regulators should also coordinate their regulatory activities and may require regular testing or the use of certain technical standards.

- Appropriate transparency and explainability – Appropriate information (such as when, how and why AI systems are used) should be communicated to the relevant people, and it should be possible for relevant parties to access, interpret and understand the decision-making of the AI system. Regulators must have enough information about the system and its inputs and outputs to apply the other principles (e.g. accountability). Parties directly affected by the system should also have enough information to enforce their rights. Regulatory guidance, product labelling and technical standards may support this principle in practice. The level of explainability provided should be appropriate to the context of the AI system, including its risks, the state of the art and the target audience.

- Fairness – AI systems may not undermine the legal rights of individuals and organisations, unfairly discriminate or cause unfair market outcomes. Regulators may develop and publish descriptions of fairness for AI systems in their own domain, taking into account applicable law, as well as guidance for relevant law, regulation and standards. Regulators will also need to ensure that AI systems in their domain follow the fairness principle and may need to develop joint guidance with each other if their domains intersect.

- Accountability and governance – There should be effective oversight of the supply and use of AI systems with clear lines of accountability, meaning that appropriate measures are taken to ensure the proper functioning of AI systems throughout their lifetime. This means, among other things, that AI life cycle actors must implement the principles at all stages of the AI system’s life cycle. Regulators need to create clear expectations for compliance and good practice, and may encourage the use of governance procedures to meet these requirements. Regulatory guidance must also reflect the responsibility to demonstrate accountability and governance.

- Contestability and redress – Users, impacted parties and actors in the AI life cycle must be able to contest an AI decision that is harmful or creates a risk of harm. Regulators must clarify existing means of contestability and redress and ensure that the outcomes of AI systems are contestable where appropriate. Regulators should also guide regulated entities to ensure clear routes for affected parties to contest AI outcomes or decisions.

The UK government expects regulators to issue or update guidance following the principles and will monitor the effectiveness of the principles and the framework. The principles will not currently be included in legislation, although the UK government is prepared to introduce a duty for regulators to have due regard to the principles if this proves necessary for their correct application. This would provide those regulators with a statutory basis to apply the principles in their work and allow them discretion in determining the relevance of each principle for their domain. Broader legislative changes may also be made if necessary for the application of the principles (e.g. if an existing legal requirement would otherwise block a regulator from applying them). Regulators, either alone or in collaboration, are expected to prioritise among the principles if they come into conflict with each other. If an AI risk does not fall clearly within the competences of an existing regulator, regulators and government will collaborate to identify further actions (e.g. changes to competences, legislative interventions etc.). Regulators, in collaboration with government, will further develop guidance for themselves to interpret the principles.

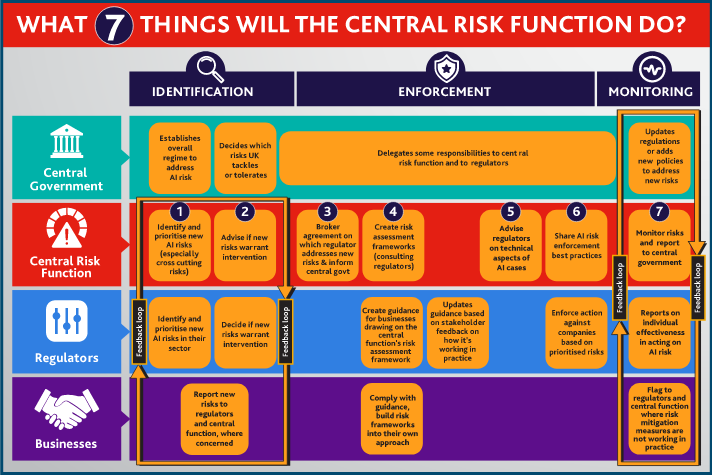

4. Central functions and accountability

Aside from the five cross-sectoral principles, the UK government will also put in place new central functions to support the framework and the work of regulators. The functions notably include:

- Monitoring, assessment and feedback – Monitoring and evaluating the new regime, gathering data to assess the effectiveness of the framework, supporting regulators in their own monitoring and evaluation as well as providing advice to ministers on issues to be addressed.

- Supporting coherent implementation of the principles – Developing guidance to support regulators in implementing the principles, identifying obstacles affecting regulators in their implementation of the principles, evaluating conflicts in how the principles are interpreted by different regulators and monitoring the relevance of the principles.

- Cross-sectoral risk assessment – Developing a register of AI risks to support regulators’ risk assessments, monitoring and reviewing known risks and identifying new risks, working with regulators to clarify responsibilities for new risks or risks with contested responsibilities to ensure coherence, and identifying where risks are not adequately covered.

Other functions include support for innovators, education and awareness (for businesses, consumers and public), horizon scanning (for new trends and AI risks) and ensuring interoperability with international regulatory frameworks.

Of note is that the approach does not intervene, or propose to intervene, in the current legal framework for the accountability for AI systems. It considers such an intervention premature and requires that the topic be explored further before government considers changing its existing accountability and liability framework. However, if a need for change becomes clear, the government may still take additional actions to change the accountability regime.

Foundation models, AI sandboxes and tools for trustworthy AI

The UK government will pay special attention to how foundation models will interact with the approach. This will happen through its new central functions and its assessment of the current accountability regime. For Large Language Models (LLMs) in particular, the government recognises that they fall within the scope of the new framework as foundation models but considers it premature to take regulatory action on LLMs or foundation models at the moment.

The UK government will also move forward with an AI (regulatory) sandbox. Initially, this sandbox pilot will focus on a single-sector, multiple-regulator model in which innovators can obtain advice from technology and regulation experts. In the future, the sandbox may shift to a multiple-sector, multiple-regulator model and may also provide a test environment for product trials. Existing sandboxes by UK regulators (such as the DRCF or FCA) will not be affected by this additional initiative.

To further support the development of trustworthy AI, the UK government will launch a portfolio of AI assurance techniques in spring 2023 in collaboration with industry. The UK government will also continue its support of technical standards as a complement to its approach to AI regulation.