Policy Prototyping the AI Act: Transparency requirements – workshop report

08.08.2023

The Knowledge Centre organized a workshop on 21 June to officially kick off its 'Policy Prototyping the AI Act: Transparency Requirements' project. A group of 18 interdisciplinary professionals worked on different prototypes for instructions for use (Art 13 AIA) and disclaimers (Art 52 AIA) based on five different use cases. The final result will be presented this autumn.

The EU emphasizes transparency as a fundamental value for the development, deployment, and use of AI systems. The topic was already prominent in the policy documents that preceded the AI Act, such as the Ethics Guidelines for Trustworthy AI, issued by the High-Level Expert Group on AI, and the White Paper on AI, issued by the European Commission. The AI Act continues that line. For this reason, the Knowledge Centre decided to focus this year's Policy Prototyping project on the transparency obligations in the EU AI Act proposal.

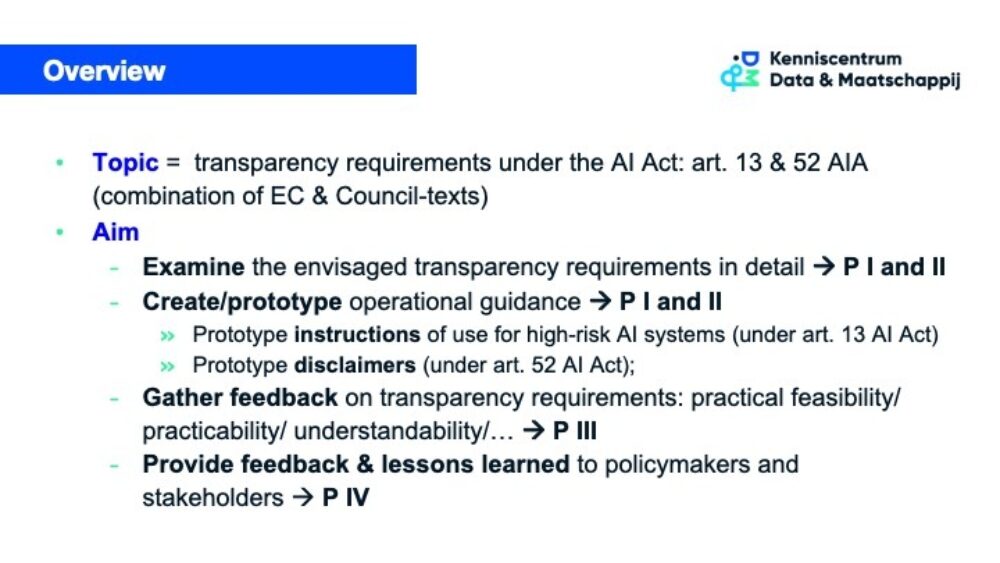

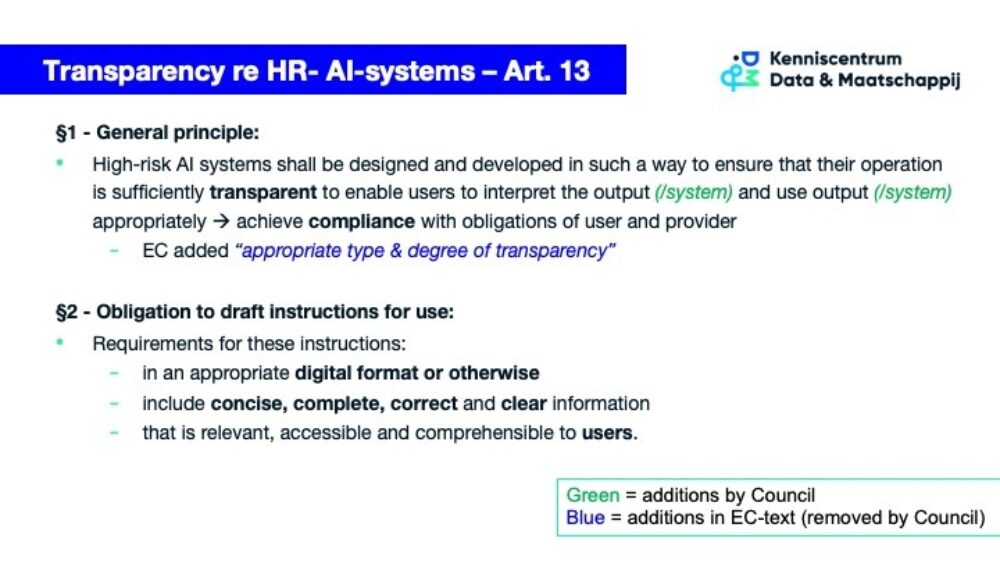

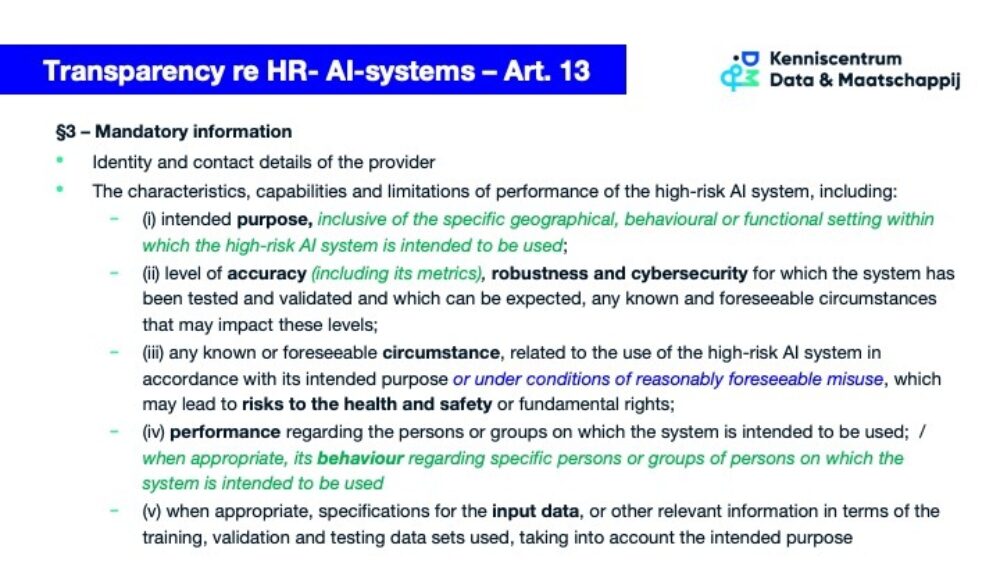

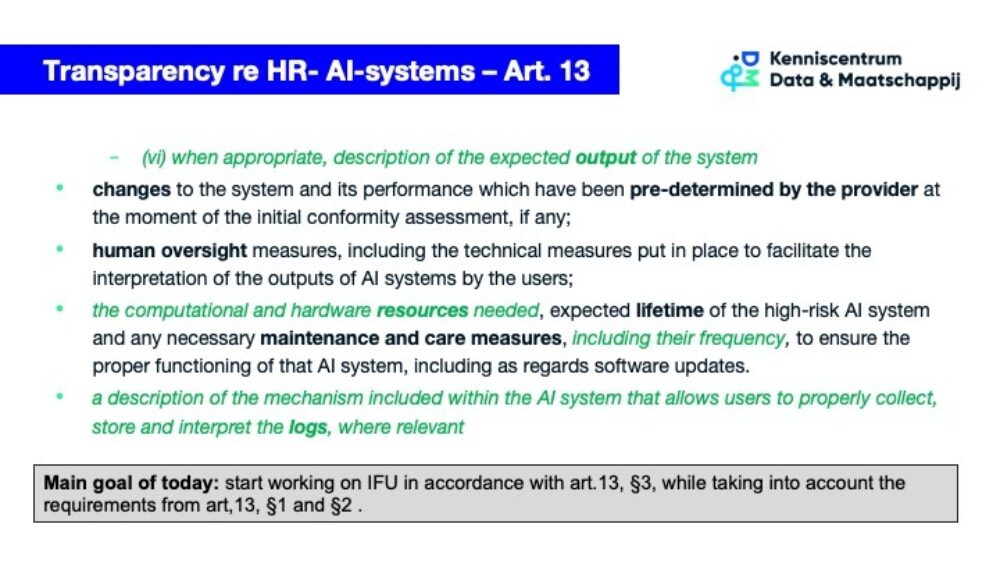

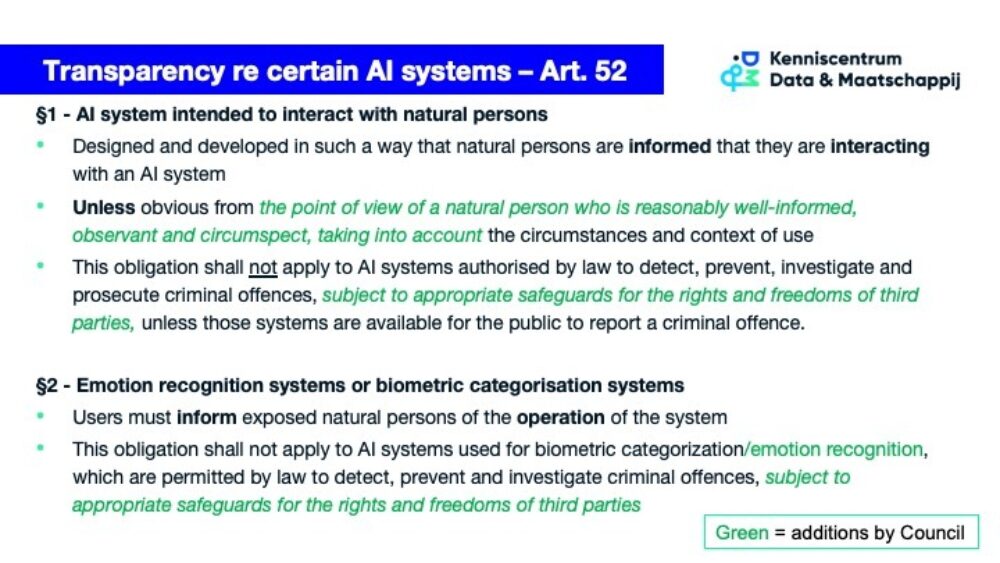

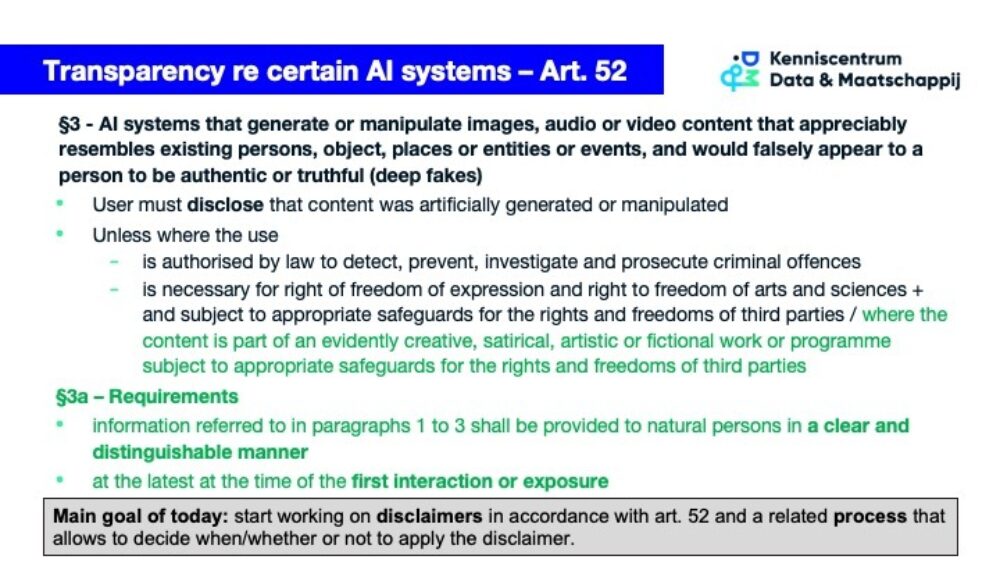

The transparency obligations are mainly set out in two articles of the regulation: Art. 13, 'Transparency and provision of information to users', and Art. 52, 'Transparency obligations for certain AI systems'. Through this project, the Knowledge Centre aims to examine the transparency obligations in detail and:

- Develop prototypes in the format of instructions for use for high-risk AI systems (under Art. 13 AIA) and disclaimers (under Art. 52 AIA).

- Collect feedback on the practical feasibility, practicability, and understandability of the transparency requirements.

- Provide feedback to policymakers and lessons learned to stakeholders.

The method

To gather feedback on the transparency requirements, the Knowledge Centre organized an in-person legal design workshop at the AI Experience Centre in Brussels. The 18 participants (professionals working with AI on a day-to-day basis and experts with experience in facilitating transparency and drafting policies) and 4 facilitators worked together for a whole day in five groups to shape first versions of the prototypes. Following a step-by-step legal design process, each group first clarified the transparency requirements in the context of a use case involving a high-risk application (under the AI Act). This included identifying the potential stakeholders of the use case, as well as pain points and opportunities. The next phase involved brainstorming and then laying the first foundations of the prototypes with a potential end-user or recipient in mind.

Participants

A total of 21 people participated in the workshop: 4 facilitators from the Knowledge Centre, 8 representatives of AI developers (including start-ups) and AI users, 2 consultants, 4 legal experts, 2 representatives of civil society organisations and 1 academic researcher.

Use case 1 – Medical AI device for disease detection

The use case focuses on an AI tool that analyzes retinal photos to assist in identifying or diagnosing specific diseases, such as diabetes.

Use case 2 – Medical device

The use case focuses on an algorithm that can predict cardiac arrhythmias, enabling early detection and better follow-up of high-risk patients. The prediction is based on an electrocardiogram and additional values of blood pressure and cholesterol.

Use case 3 – Chatbot

The use case focuses on a chatbot that employees can use to ask HR-related questions. The bot draws on a collection of documents (e.g. HR documentation) to answer these questions.

Use case 4 – Deepfake

The use case focuses on the use of deepfake technology in documentaries. The technology protects the anonymity of the people involved while retaining their facial expressions and emotions.

Use case 5 – HR Talent API

In this use case, a four-way talent matching API is used to connect job seekers with vacancies relevant to them. The AI system takes several criteria into account, such as work experience, travel time and acquired skills. The instructions for use prototype will focus on users/deployers of the AI system.

Preliminary insights from the workshop

The operationalisation of both articles depends heavily on the context in which they are applied and on the recipients of the information. A thorough exploration of the target audience is necessary when preparing accompanying documentation.

The current wording of both articles makes it difficult to evaluate their implementation against the envisaged obligations/thresholds. Concrete implementation guidelines providing (linguistic) clarity would therefore be very helpful. Otherwise, many of the requirements will remain open to interpretation and lead to discussion (how much information is actually enough?).

Some sectors may be able to rely on existing documentation under other legislation.

The workshop illustrated the importance of multidisciplinarity. Participants contributed from their own professional backgrounds and interacted with one another, which proved important when developing the preliminary prototypes.

Next steps

During the workshop, rough drafts of the prototypes were conceived. These drafts will now be further developed by experts from various groups in accordance with the AI Act. In the autumn, the Knowledge Centre will organise a follow-up online workshop (date to be announced) where the participants and other interested parties can give feedback on the developed prototypes. This should ultimately lead to a report presenting the prototypes and policy recommendations.